Offline Voice Control: Building a Hands-Free Mobile App with On-Device AI

Imagine you’re a field engineer repairing equipment on a remote site: your hands are full, the environment is noisy, and connectivity is spotty. In such constrained environments, hands-free voice control can be a game-changer. Voice commands let users interact with mobile or embedded apps without touching the screen, improving safety and efficiency. However, traditional voice assistants often depend on cloud services, and that isn’t always practical in the field. This post explores how to build an offline, real-time voice control system for mobile apps using on-device AI. We’ll use Switchboard, a toolkit for real-time audio and AI processing, to achieve reliable voice interaction entirely on-device.

Why Offline Voice Control Matters

Offline voice control offers several key advantages over cloud-based solutions:

Low Latency: Processing speech on-device eliminates network round-trips, so responses arrive almost instantly, which is crucial for a natural user experience. For example, running OpenAI’s Whisper speech recognition locally takes the cloud server out of the loop entirely. Real-time interaction feels snappier without the 200 to 500 ms of overhead that sending audio to a server and waiting for a reply can add.

Reliability Anywhere: An offline voice UI works anytime, anywhere: in a basement, a rural area, or airplane mode. There’s no dependence on an internet connection, so voice commands keep working in low- or no-connectivity environments.

Cost Efficiency: Cloud speech APIs may seem inexpensive per request, but costs add up at scale (and can spike with usage). With on-device speech recognition, once the model is on the device, each additional voice command is essentially free. There are no per-hour or per-character fees for transcription or synthesis, making offline solutions far more cost-effective for high-volume or long-duration use.

Privacy & Compliance: Keeping voice data on-device means sensitive audio never leaves the user’s control. Cloud-based voice assistants send recordings to servers, raising concerns about data breaches and regulatory violations. Offline processing mitigates these risks because no audio streams over the internet, which is especially important in domains like healthcare, defense, or enterprise settings with strict data policies. By design, an on-device voice assistant provides strong privacy guarantees.

In short, offline voice control gives you speed, dependability, and user trust that cloud-dependent solutions often can’t match. It lets your app’s voice features work in real time under all conditions, without recurring service fees or privacy headaches.

Problems with Cloud-Based Voice UIs

Conversely, cloud-reliant voice user interfaces come with several pitfalls that affect both developers and users:

Connectivity Issues: A cloud voice UI simply fails when offline. If a technician is in a dead zone or a secure facility with no internet, cloud speech recognition won’t function: no network, no voice UI. Even with connectivity, high latency or jitter can degrade the experience (e.g. delays or mid-command dropouts).

Ongoing Costs: Relying on third-party speech services means ongoing usage fees. What starts cheap in prototyping can become expensive at scale, or if you hit tier limits. For instance, transcribing audio via a popular cloud API might cost on the order of $0.18 per hour of audio, a cost that accumulates every time a user talks to your app. This can hurt the viability of voice features in a high-usage app.
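To see how per-hour pricing adds up, here is a back-of-the-envelope estimate in Swift. The usage figures (10,000 active users, 50 two-second commands per user per day) are hypothetical, and the price is the $0.18 per audio hour quoted above. Real APIs may also apply billing minimums or rounding, which this sketch ignores.

```swift
import Foundation

/// Rough monthly cost of sending voice commands to a cloud STT API billed
/// per hour of audio. All inputs are illustrative assumptions, not measurements.
struct CloudSTTUsage {
    var activeUsers: Int
    var commandsPerUserPerDay: Int
    var secondsPerCommand: Double
    var pricePerAudioHour: Double   // e.g. 0.18 (USD), the figure quoted above
    var daysPerMonth: Int = 30

    /// Total hours of audio uploaded per month.
    var audioHoursPerMonth: Double {
        let commands = Double(activeUsers * commandsPerUserPerDay * daysPerMonth)
        return commands * secondsPerCommand / 3600.0
    }

    /// Estimated monthly bill (ignores billing minimums and rounding rules).
    var monthlyCost: Double {
        audioHoursPerMonth * pricePerAudioHour
    }
}

let usage = CloudSTTUsage(activeUsers: 10_000,
                          commandsPerUserPerDay: 50,
                          secondsPerCommand: 2.0,
                          pricePerAudioHour: 0.18)
print(String(format: "%.0f audio hours, about $%.0f per month",
             usage.audioHoursPerMonth, usage.monthlyCost))
```

With these assumed numbers, that is 30,000,000 seconds (roughly 8,333 hours) of audio, or about $1,500 per month, recurring. With on-device recognition, the marginal transcription cost of the same traffic is essentially zero.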

Compliance and Privacy Risks: Many industries have regulations that forbid sending user data (especially voice, which may contain personal or sensitive info) to external servers. Cloud voice services introduce data residency and security concerns, since audio is streamed and stored outside the device. There’s an inherent risk in transmitting customer conversations to the cloud. Meeting GDPR, HIPAA, or internal compliance standards becomes much harder with a cloud pipeline.

Battery Drain: Constantly streaming audio to the cloud can also impact battery life. The device’s radios (Wi-Fi or cellular) must stay active, using power for data transmission. In contrast, on-device processing can be optimized to use the device’s local compute resources more efficiently. While running AI models locally does consume CPU, modern on-device models can be tuned to balance performance and energy use. Avoiding the network can actually save power in scenarios where the alternative is an always-on uplink.

The bottom line: cloud voice UIs may work for casual consumer use, but they can stumble in mission-critical or resource-constrained scenarios. An app meant for field work, offline environments, or privacy-sensitive tasks demands an on-device solution to ensure it’s fast, reliable, and secure under all conditions.

Demo Use Case: Voice Commands for App Control

To make this concrete, let's consider a demo use case: a voice-controlled iOS movie browsing app. Users are frequently multitasking: eating, exercising, or simply relaxing, making hands-free operation highly valuable. Voice control also serves those with motor impairments. We'll build an app that lets users navigate and interact with content via voice. For example, the user could say: "Next movie" or "Like this one."

In this scenario, the app would interpret the speech command, navigate to the next movie or mark it as liked, and provide visual feedback. All of this needs to happen offline in real time, with low latency for smooth interaction and high accuracy to avoid misrecognitions.

This demo encompasses a complete voice interaction loop:

Voice capture: continuously listen for the user's speech

Speech recognition (STT): transcribe the spoken command into text

Command parsing: understand the intent (navigate, like, etc.) and trigger the action

Take action: update the UI state in the app

Visual feedback: show recognized commands to confirm understanding
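The last two steps of this loop (take action, visual feedback) can be modeled independently of the audio pipeline. Below is a minimal Swift sketch of the app-side state. It assumes a hypothetical `VoiceCommand` enum and a plain list of titles, and it is not the demo project's code. Each recognized command updates the state and returns a feedback string the UI can display.

```swift
import Foundation

/// Commands the app reacts to (hypothetical names for illustration).
enum VoiceCommand: Equatable {
    case next
    case previous
    case like
}

/// App-side state for the "take action" and "visual feedback" steps.
struct MovieBrowserState {
    let titles: [String]
    private(set) var currentIndex = 0
    private(set) var liked: Set<String> = []

    init(titles: [String]) {
        self.titles = titles
    }

    var currentTitle: String? {
        titles.indices.contains(currentIndex) ? titles[currentIndex] : nil
    }

    /// Applies a recognized command and returns a message to show on screen.
    mutating func apply(_ command: VoiceCommand) -> String {
        guard let title = currentTitle else { return "No movies loaded" }
        switch command {
        case .next:
            currentIndex = min(currentIndex + 1, titles.count - 1)  // clamp at the last movie
        case .previous:
            currentIndex = max(currentIndex - 1, 0)                 // clamp at the first movie
        case .like:
            liked.insert(title)
            return "Liked \"\(title)\""
        }
        return "Now showing \"\(titles[currentIndex])\""
    }
}

var browser = MovieBrowserState(titles: ["Alien", "Heat", "Up"])
print(browser.apply(.next))  // Now showing "Heat"
print(browser.apply(.like))  // Liked "Heat"
```

Clamping at either end of the list, instead of wrapping around, is just one design choice. Wrapping would work equally well for a carousel-style UI.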

We'll build this with Switchboard, which provides a convenient way to set up an on-device voice pipeline for iOS apps.

Why Switchboard?

Switchboard is a framework of modular audio and AI components built for real-time, on-device processing. It allows you to assemble custom audio pipelines (called audio graphs) with minimal integration effort. For our offline voice control app, Switchboard brings several benefits:

On-Device, Real-Time Processing: All voice data stays on the device, and inference happens locally with minimal latency. Switchboard’s nodes build on efficient libraries. For example, the Silero VAD model can analyze a 30 ms audio frame in under 1 ms on a single CPU thread. OpenAI’s Whisper model (for STT) is integrated through the C++ implementation whisper.cpp, which keeps transcription latency low on the CPU, even on mobile hardware. This means voice commands can be recognized and acted on essentially in real time, without any cloud compute.

Simple Cross-Platform Integration: Switchboard provides a unified API across iOS (Swift), Android (Kotlin/C++), desktop (macOS/Windows/Linux in C++), and even embedded Linux. You can integrate it as a library or design your audio graph visually in the Switchboard Editor and deploy it to different platforms. Our focus here is iOS, but the same graph can run on Android or a Raspberry Pi with minimal changes. This flexibility is a boon for teams targeting multiple environments.

All-in-One Voice Pipeline: Out of the box, Switchboard includes nodes for the core tasks we need: Voice Activity Detection (VAD), Speech-To-Text (STT), intent processing via an LLM or rule engine, and Text-To-Speech (TTS). Under the hood it uses proven open-source models: Silero VAD to detect speech segments, OpenAI Whisper (via whisper.cpp) for transcription, and Silero TTS for voice synthesis (or other TTS engines as extensions). There’s even support for incorporating a local LLM (e.g. Llama 2 via llama.cpp) to handle more complex intent logic. Because these components are pre-integrated as Switchboard nodes, you don’t have to stitch together separate libraries or processes; they all run in one seamless audio graph.

No GPU Required: Switchboard’s AI nodes are optimized for both GPU and CPU execution, often using quantized models and efficient C++ inference. You do not need a dedicated GPU or Neural Engine to run this pipeline. For example, Whisper’s tiny and base models run comfortably on modern mobile ARM CPUs, and the entire pipeline (VAD → STT → LLM → TTS) can run on a typical smartphone or embedded board in real time. This makes the solution viable on devices like iPhones, Android phones, or edge IoT hardware without specialized accelerators. It also simplifies deployment: no extra drivers or cloud instances are needed.

In short, Switchboard provides the building blocks to implement offline voice control quickly and robustly. We get to focus on our app’s logic (navigating and liking movies) rather than low-level audio processing or model integration details. Next, let’s look at the architecture and how these pieces connect.

Architecture Overview

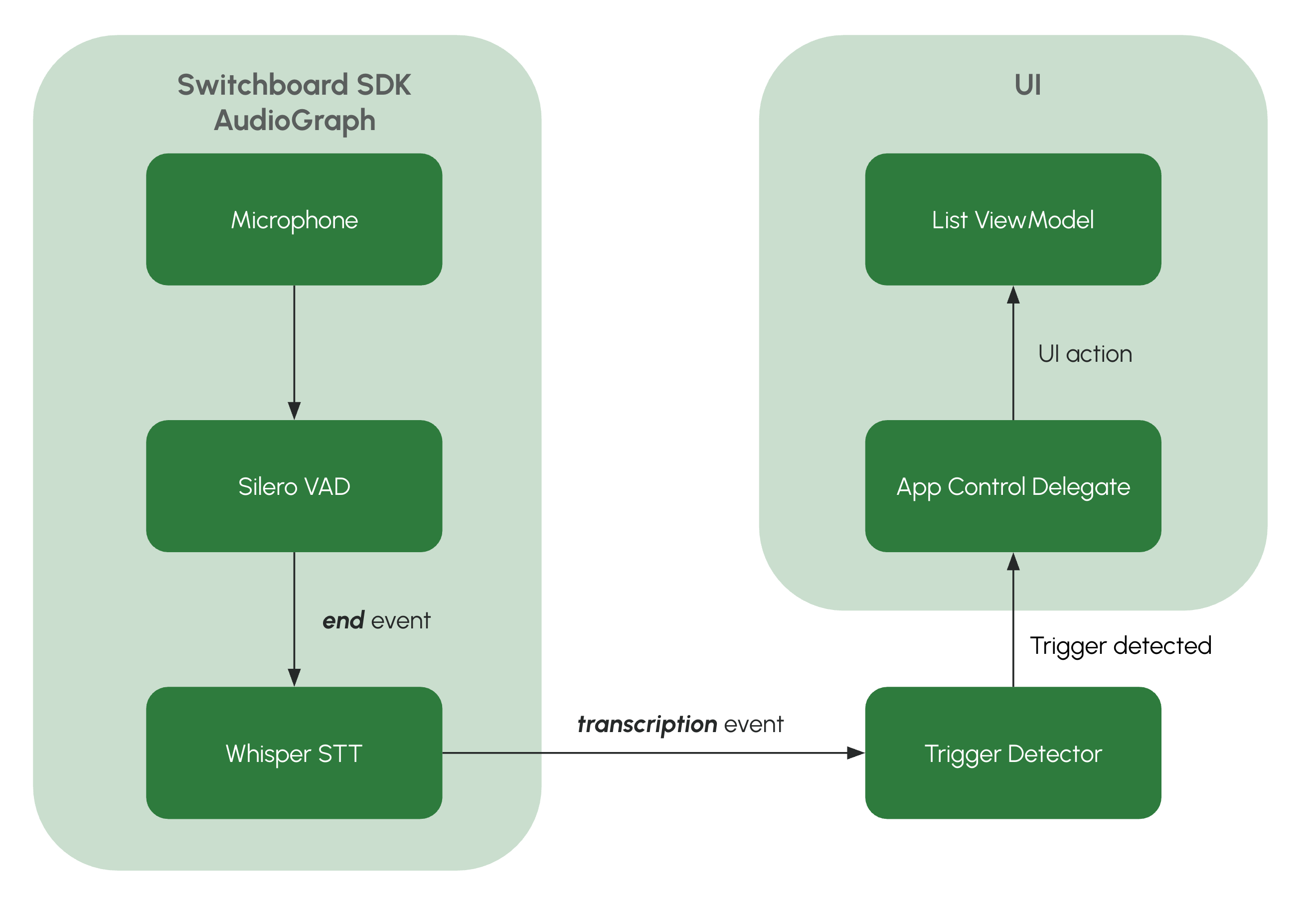

To build our voice control system, we set up an audio graph in Switchboard with the following key nodes:

SileroVADNode: Listens to the microphone audio and detects when the user starts and stops speaking. This voice activity detector filters out background noise and avoids sending silence to the speech recognizer.

WhisperNode: Takes in audio and produces text transcripts using the Whisper speech-to-text model. This node gives us the recognized command, turning the spoken words "next movie" into the text string "next movie".
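Silero VAD outputs a speech probability for each audio chunk, so detecting when the user starts and stops speaking comes down to thresholding those probabilities and waiting for enough trailing silence. The Swift sketch below shows that logic on its own. The `UtteranceSegmenter` type, the 0.5 threshold, and the frame counts are illustrative assumptions, not the `SileroVADNode` API or its defaults.

```swift
import Foundation

/// Turns per-frame speech probabilities (such as a VAD produces) into
/// utterance boundaries. Thresholds and frame counts are illustrative.
struct UtteranceSegmenter {
    let speechThreshold: Float   // probability at or above which a frame counts as speech
    let minSilenceFrames: Int    // trailing silent frames that end an utterance
    let minSpeechFrames: Int     // shorter bursts are discarded as noise

    private var startFrame: Int? = nil
    private var lastSpeechFrame = 0
    private var frameIndex = 0

    init(speechThreshold: Float = 0.5, minSilenceFrames: Int = 10, minSpeechFrames: Int = 3) {
        self.speechThreshold = speechThreshold
        self.minSilenceFrames = minSilenceFrames
        self.minSpeechFrames = minSpeechFrames
    }

    /// Feed one frame's probability. Returns the frame range of a finished
    /// utterance (the audio to hand to STT), or nil while still listening.
    mutating func process(probability: Float) -> Range<Int>? {
        defer { frameIndex += 1 }
        if probability >= speechThreshold {
            if startFrame == nil { startFrame = frameIndex }
            lastSpeechFrame = frameIndex
            return nil
        }
        // Silence: end the utterance only after enough silent frames since the last speech.
        guard let start = startFrame, frameIndex - lastSpeechFrame >= minSilenceFrames else {
            return nil
        }
        startFrame = nil
        let speechFrames = lastSpeechFrame - start + 1
        return speechFrames >= minSpeechFrames ? start..<(lastSpeechFrame + 1) : nil
    }
}

// With 30 ms frames, 10 silent frames is about 300 ms of trailing silence.
var segmenter = UtteranceSegmenter()
var probabilities: [Float] = [0.1, 0.9, 0.95, 0.9]
probabilities += Array(repeating: 0.05, count: 10)
for p in probabilities {
    if let utterance = segmenter.process(probability: p) {
        print("Utterance spans frames \(utterance)")  // Utterance spans frames 1..<4
    }
}
```

The returned range is in frames. Multiply it by the frame length (for example, 480 samples for 30 ms at 16 kHz, the sample rate Whisper expects) to slice out the audio to transcribe.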

All these nodes run on-device and are connected in a pipeline. The overall flow is: Microphone → VAD → STT → Trigger Detection Logic → App Action.

The microphone stream is fed into both the VAD and STT components (in Switchboard, we use a splitter node to branch the audio). The VAD monitors the audio continuously, and we proceed only when it detects actual speech. When the user finishes speaking (the VAD detects the end of the utterance), it triggers the Whisper STT node to transcribe just that segment of audio. Once we have the transcription of the user’s speech, we can look for trigger keywords for different actions: custom logic matches keywords like "next", "previous", "like", or movie titles and triggers the appropriate UI actions.
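Trigger detection can be as simple as normalizing the transcript and looking for keywords. Here is one possible Swift sketch. The `CommandMatcher` type, the synonym list ("skip", "back", "favorite"), and the `open(title:)` command are hypothetical additions for illustration. Whisper transcripts usually include capitalization and punctuation (for example, "Next movie."), so the sketch lowercases the text and splits on non-alphanumeric characters before matching.

```swift
import Foundation

/// Commands recognized from a transcript (hypothetical; `open` is an added example).
enum VoiceCommand: Equatable {
    case next
    case previous
    case like
    case open(title: String)
}

/// Keyword-based trigger detection over a Whisper transcript.
struct CommandMatcher {
    let knownTitles: [String]

    /// Keyword -> command, checked in this order. Synonyms are illustrative.
    static let keywords: [(word: String, command: VoiceCommand)] = [
        ("next", .next), ("skip", .next),
        ("previous", .previous), ("back", .previous),
        ("like", .like), ("favorite", .like),
    ]

    /// Lowercases and splits on anything that isn't a letter or digit,
    /// so "Next movie." becomes ["next", "movie"].
    static func words(in text: String) -> [String] {
        text.lowercased()
            .components(separatedBy: CharacterSet.alphanumerics.inverted)
            .filter { !$0.isEmpty }
    }

    func match(_ transcript: String) -> VoiceCommand? {
        let words = Self.words(in: transcript)
        guard !words.isEmpty else { return nil }

        // Titles first (longest first), matched on whole-word boundaries.
        let padded = " " + words.joined(separator: " ") + " "
        for title in knownTitles.sorted(by: { $0.count > $1.count }) {
            let needle = Self.words(in: title).joined(separator: " ")
            if !needle.isEmpty && padded.contains(" \(needle) ") {
                return .open(title: title)
            }
        }

        // Then single-word keywords, in priority order.
        let wordSet = Set(words)
        for (word, command) in Self.keywords where wordSet.contains(word) {
            return command
        }
        return nil
    }
}

let matcher = CommandMatcher(knownTitles: ["Alien", "Heat"])
if let command = matcher.match("Like this one!") {
    print(command)  // like
}
```

Titles are checked before single keywords so that a title containing a command word, such as a film with "Next" in its name, isn't mistaken for a navigation command. A plain keyword matcher will still misfire on phrases like "I don't like this one"; that is where richer intent handling, such as the LLM option mentioned later, becomes useful.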

You can grab the full example project from this GitHub repository: voice-app-control-example-ios.

Going Further

The voice control example can easily be extended to fit a variety of use cases just by changing the trigger keywords and the associated UI updates. We can further improve and refine the system in many ways:

Better Noise Handling: Background noise can be a challenge for voice control applications. Switchboard provides noise suppression nodes like RNNoiseNode (a denoising ML model) and others that you can put in the pipeline before the STT node. You can also tune the Silero VAD’s sensitivity or use a noise gate to ignore constant hums. Selecting the right Whisper model size is also important if the domain has lots of noise or technical jargon: larger models like Whisper Small or Medium might give better accuracy at the cost of some speed, so you’ll need to balance the two for your target devices and users.
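For the noise-gate option, here is a minimal Swift sketch that mutes frames whose RMS level falls below a threshold, with a short hold so the gate doesn't chop off the tail of a word. The `NoiseGate` type, the -45 dBFS default, and the hold length are assumptions to tune per device, and this is not a Switchboard node. A level-based gate only removes noise that stays below its threshold; louder or fluctuating noise is what ML denoisers like RNNoise are for.

```swift
import Foundation

/// A basic level-based noise gate (illustrative sketch).
struct NoiseGate {
    let thresholdDBFS: Double   // frames quieter than this are muted
    let holdFrames: Int         // quiet frames still let through after a loud one
    private var quietFramesSinceOpen = Int.max

    init(thresholdDBFS: Double = -45, holdFrames: Int = 5) {
        self.thresholdDBFS = thresholdDBFS
        self.holdFrames = holdFrames
    }

    /// RMS level of a frame in dB relative to full scale (samples in -1.0...1.0).
    static func rmsDBFS(_ frame: [Float]) -> Double {
        guard !frame.isEmpty else { return -.infinity }
        let sumOfSquares = frame.reduce(Float(0)) { $0 + $1 * $1 }
        let meanSquare = Double(sumOfSquares) / Double(frame.count)
        return 10 * log10(meanSquare)   // same as 20 * log10(rms)
    }

    /// Returns the frame unchanged while the gate is open, or silence when closed.
    mutating func process(_ frame: [Float]) -> [Float] {
        if Self.rmsDBFS(frame) >= thresholdDBFS {
            quietFramesSinceOpen = 0                 // loud frame: (re)open the gate
        } else if quietFramesSinceOpen < holdFrames {
            quietFramesSinceOpen += 1                // still within the hold period
        } else {
            return [Float](repeating: 0, count: frame.count)
        }
        return frame
    }
}
```

In a pipeline, a gate like this would sit between the microphone and the VAD, processing the same fixed-size frames (for example, 480 samples for 30 ms at 16 kHz).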

Custom Wake Words: Our current setup is always listening, which might not be ideal for battery life or user experience. You can incorporate a wake word (like “Hey AppName”) to activate voice processing only when needed. Switchboard can integrate with Picovoice Porcupine or other wake word detectors as nodes. This way, the VAD+STT only runs after the wake word is detected, saving power and avoiding unintended commands. In an embedded scenario, a wake word detector can be extremely low-power compared to running full ASR constantly.
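The gating itself is a small state machine: once the wake word fires, forward audio to VAD and STT for a limited window, then close again. The Swift sketch below assumes a hypothetical per-frame `wakeWordDetected` flag from whichever detector you use (it does not use Porcupine's actual API), and the 5-second window is an arbitrary default.

```swift
import Foundation

/// Gates the VAD+STT stages behind a wake word (illustrative sketch).
struct WakeWordGate {
    let listenWindow: TimeInterval
    private var armedUntil: TimeInterval = -.infinity

    init(listenWindow: TimeInterval = 5.0) {
        self.listenWindow = listenWindow
    }

    /// Returns true if the frame at `time` (seconds) should go to VAD/STT.
    mutating func shouldForward(wakeWordDetected: Bool, at time: TimeInterval) -> Bool {
        if wakeWordDetected {
            armedUntil = time + listenWindow
            return false   // don't transcribe the wake word itself
        }
        return time < armedUntil
    }

    /// Call after a command is handled so the gate closes immediately.
    mutating func commandHandled() {
        armedUntil = -.infinity
    }
}
```

Closing the gate as soon as a command is handled, rather than waiting out the window, reduces the chance that follow-up chatter is treated as a second command.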

Multiple Intents and Dialogues: Our demo handles a handful of simple commands, but you can extend the intent handler to support many more; a field-service app, for instance, might add open ticket, close ticket, or look up manual. For complex interactions, consider using an LLM to manage a dialogue. Switchboard’s LLM integration could maintain context: e.g., after opening a ticket, the user could ask "What’s the status of unit 42?", and the LLM node (with some prompt engineering) could fetch that info and reply via TTS. This would turn your app into a more conversational assistant, all offline. Just be mindful of device limitations when adding more AI tasks.

Error Handling and Retries: In practice, you’ll want to handle cases where the speech wasn’t clear or the transcription is unreliable. You might implement simple retry logic: if the transcription confidence or intent match falls below a threshold, ask the user to repeat (using TTS to say "Sorry, could you repeat that?"). Whisper doesn’t report a single confidence score for a transcript out of the box, but you can approximate one (whisper.cpp, for example, exposes per-token probabilities) or use heuristics (e.g., no intent identified). A smooth fallback will improve usability.
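A minimal Swift sketch of such a retry policy follows. It assumes the app passes in the transcript, the intent it matched (if any), and an optional confidence estimate you derive yourself. `RetryPolicy`, `RecognitionOutcome`, and the thresholds are hypothetical. After too many failed attempts, the sketch gives up so the user can fall back to touch input.

```swift
import Foundation

/// What the app should do after one recognition attempt.
enum RecognitionOutcome: Equatable {
    case execute(String)   // run the matched intent
    case askToRepeat       // e.g. speak "Sorry, could you repeat that?" via TTS
    case giveUp            // fall back to on-screen controls
}

/// Heuristic retry policy standing in for a Whisper confidence score.
struct RetryPolicy {
    let maxAttempts: Int
    let minConfidence: Double
    private(set) var failedAttempts = 0

    init(maxAttempts: Int = 2, minConfidence: Double = 0.6) {
        self.maxAttempts = maxAttempts
        self.minConfidence = minConfidence
    }

    mutating func evaluate(transcript: String,
                           matchedIntent: String?,
                           confidence: Double? = nil) -> RecognitionOutcome {
        let text = transcript.trimmingCharacters(in: .whitespacesAndNewlines)
        // With no confidence estimate available, rely on the other heuristics.
        var confidentEnough = true
        if let c = confidence { confidentEnough = c >= minConfidence }

        if let intent = matchedIntent, !text.isEmpty, confidentEnough {
            failedAttempts = 0
            return .execute(intent)
        }
        failedAttempts += 1
        if failedAttempts >= maxAttempts {
            failedAttempts = 0
            return .giveUp
        }
        return .askToRepeat
    }
}

var policy = RetryPolicy()
switch policy.evaluate(transcript: "Mmm, the uh", matchedIntent: nil) {
case .execute(let intent): print("Run \(intent)")
case .askToRepeat: print("Sorry, could you repeat that?")
case .giveUp: print("Showing on-screen controls")
}
```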

Multi-Language Support: Whisper models can handle many languages. If your app needs to support multilingual users, you could set the WhisperNode to auto-detect the language or explicitly load models for the target languages. Switchboard allows switching out models or running multiple STT nodes if needed (though running two large models at once on a device might be heavy). Similarly, you can use TTS voices for different languages, all without cloud services. This is great for apps that must operate in remote regions with various local languages (imagine an agriculture app for remote villages).

Deployment on Embedded Devices: While our example was mobile-focused, the same pipeline can run on an embedded Linux board or even inside a desktop app. You might deploy an offline voice-controlled interface on an industrial device or a kiosk. Switchboard’s C++ API lets you integrate into such environments. With no cloud dependencies, you only have to ship the model files and the binary, and everything runs on-premises. Monitor memory and CPU usage on lower-end hardware, and use the smallest models that meet your accuracy needs.

Performance Optimization: Monitor CPU and memory usage, especially on older devices. Use smaller Whisper models (tiny/base) for better performance, or larger ones (small/medium) for improved accuracy based on your needs.
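Model choice can also be made at runtime from a memory budget. The Swift sketch below picks the largest Whisper size that fits and, for English-only apps, the `.en` variant, which the Whisper authors report tends to perform better on English (especially for tiny and base). The parameter counts in the comments come from the Whisper release. The `approxMemoryMB` values and the quarter-of-RAM budget are rough placeholder assumptions, so replace them with measurements from your target devices.

```swift
import Foundation

/// Whisper sizes in increasing order of cost and (usually) accuracy.
enum WhisperSize: String, CaseIterable {
    case tiny    // ~39M parameters
    case base    // ~74M parameters
    case small   // ~244M parameters
    case medium  // ~769M parameters

    /// Rough working-memory estimate in MB. Placeholder assumption: measure on your devices.
    var approxMemoryMB: Int {
        switch self {
        case .tiny: return 300
        case .base: return 400
        case .small: return 900
        case .medium: return 2_100
        }
    }
}

/// Returns a model name such as "base.en", or nil if nothing fits the budget.
func chooseWhisperModel(memoryBudgetMB: Int, englishOnly: Bool) -> String? {
    // allCases follows declaration order, so the last fitting case is the largest.
    guard let size = WhisperSize.allCases.last(where: { $0.approxMemoryMB <= memoryBudgetMB }) else {
        return nil
    }
    return englishOnly ? "\(size.rawValue).en" : size.rawValue
}

// Hypothetical heuristic: let the model use roughly a quarter of physical RAM.
let budgetMB = Int(ProcessInfo.processInfo.physicalMemory / 1_048_576 / 4)
print(chooseWhisperModel(memoryBudgetMB: budgetMB, englishOnly: true) ?? "no model fits")
```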

There is a lot of room to tailor the solution to your specific use case. The modular nature of Switchboard means you can plug and play components (swap Whisper for another STT model, or Silero TTS for a custom TTS engine) and tweak the graph configuration. Switchboard’s documentation is a great resource to learn about available nodes and best practices for real-time audio graphs.

Available Now

Hands-free voice control is no longer limited to big-tech assistants. With on-device AI, any mobile or embedded app can have a reliable voice interface that works offline. By using Switchboard’s SDK, we integrated state-of-the-art speech models (VAD and STT) into a cohesive pipeline, all running locally. The result is an app that’s faster (low latency), cheaper (no cloud fees), more secure (user data never leaves the device), and more robust (it works in a Faraday cage or the middle of nowhere). These benefits are game-changing for developers building solutions in industrial, healthcare, military, or any scenario where cloud connectivity isn’t guaranteed or desirable.

We demonstrated a simple voice-controlled app, but the possibilities are endless: from voice-controlled IoT appliances, to offline voice assistants for vehicles, to mobile apps that users can operate while exercising or driving. With on-device voice AI, you control the experience end-to-end, and users get the convenience of voice interaction with full privacy and reliability.

If you’re ready to add offline voice capabilities to your own app, give Switchboard a try. The SDK is actively maintained, and the official documentation has detailed guides and examples to get you started. You can start with a simple command or two and gradually build up a powerful voice UX tailored to your domain. Empower your users to talk to your app anywhere, no internet required. Happy coding, and happy talking!

Want to see what else we're building? Check out Switchboard and Synervoz.